From dorms to trout ponds: Leveraging waste heat from AI computing (podcast included)


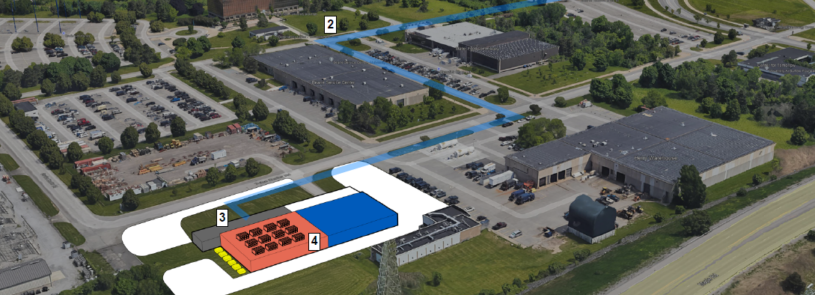

Campus Energy Reuse: The illustration above shows phase 1 of a campus energy reuse project. Excess energy from the high performance computing facility (1) is delivered via buried chilled water infrastructure (2) to the chilled water return (3) within the central utility plant (4). The energy is then distributed in the form of direct heat via the existing campus heating distribution or via a modern 5th generation district system that utilizes heat pumps and distributed source/sinks.

By Ken Urbanek and Brandon Fortier

The ever-greater energy consumption of modern high performance computing facilities—think energy-intensive AI deep learning computing—presents significant operational challenges and costs for owners. Such facilities also present a unique opportunity: a high degree of potential for waste heat recovery. In a campus situation, this excess heat can be used as an energy source for other buildings, greatly supporting overall sustainability and greenhouse gas reduction goals.

This strategy is beneficial not only for private sector data centers, but also for higher education, healthcare, and federal institutions with a high-performance computing facility on campus. Even institutions without such data centers are considering upgrading to AI computing and leveraging the excess energy to aid their long-term sustainability initiatives. (High-performance computing facilities also have found energy synergy with nearby, unrelated operations, providing conditioning for greenhouses and even a trout farm pond.)

Achieving the desired computing power capacity, system reliability, energy efficiency, and facility uptime depends on the data center’s mechanical and electrical systems. To meet the cooling needs of AI computational equipment, liquid cooling technologies such as immersion and direct-to-chip cooling are replacing standard air cooling, which is ill-suited to ever-increasing high-density power demands. These high-performance cooling systems capture significant waste heat from the computing equipment, which can be recovered and pushed into district energy systems to heat neighboring facilities. This heat recovery could take the form of direct heat into the existing campus heating distribution, or it could be the catalyst for the deployment of a modern 5th generation district system that utilizes heat pumps and distributed source/sinks.

IMEG has been designing state-of-the-art computing facilities for many years and is working with several clients who are planning or considering an AI computing facility. For these types of projects, owners need to consider multiple factors beyond synergistic energy use, including:

- Utility services: Confirming that utility electrical service capacity is or will be available to the building, both for day one and for future growth

- Space programming: Committing a large portion of the programmed space to generators, chillers, UPS, switchgear, etc., to meet the needs of the high density of compute and the high overall capacity

- Criticality: Determining the number of redundant components and pathways needed within the data center

- Cooling needs: Specifying high-density cooling—typically direct-liquid cooling (DLC) and rack-specific cooling like rear door coolers—to adequately cool high rack densities that push beyond 100 kW/rack in some cases

- Energy and water efficiency: Fine-tuning system selection to ensure power use effectiveness (PUE), water use effectiveness (WUE), and other sustainability goals are achieved

- Resiliency and flexibility: Providing the facility with the flexibility to respond to the ever-changing landscape of the computing world, where AI is still in its infancy
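For readers unfamiliar with the efficiency metrics named in the list above, the short sketch below shows how PUE and WUE are commonly calculated, using the widely cited Green Grid definitions. It also includes energy reuse effectiveness (ERE), a related Green Grid metric that this article does not mention but that credits heat exported for reuse elsewhere on campus. The function names and all input figures are hypothetical and chosen only for illustration; they do not describe any actual facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power use effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def wue(site_water_liters: float, it_equipment_kwh: float) -> float:
    """Water use effectiveness: site water use (liters) / IT energy (kWh)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return site_water_liters / it_equipment_kwh


def ere(total_facility_kwh: float, reused_kwh: float,
        it_equipment_kwh: float) -> float:
    """Energy reuse effectiveness: (total - reused energy) / IT energy.

    Not named in the article; included as an assumption because it
    accounts for waste heat that is exported and reused.
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return (total_facility_kwh - reused_kwh) / it_equipment_kwh


# Hypothetical annual figures for an example facility (illustrative only).
it_kwh = 10_000_000       # energy delivered to computing equipment
total_kwh = 12_000_000    # IT plus cooling, power distribution, lighting
water_l = 5_000_000       # annual site water consumption in liters
reused_kwh = 3_000_000    # waste heat exported to campus heating

print(f"PUE: {pue(total_kwh, it_kwh):.2f}")
print(f"WUE: {wue(water_l, it_kwh):.2f} L/kWh")
print(f"ERE: {ere(total_kwh, reused_kwh, it_kwh):.2f}")
```

A lower value is better for all three metrics. Unlike PUE, ERE can drop below 1.0 when a facility exports enough heat, which is one way an owner might quantify the campus energy benefit described in this article.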

By considering all factors and making well-informed decisions, owners can ensure that their AI computing facility will achieve their objectives in the most energy-efficient, cost-effective way possible—and potentially gain the benefit of a new source of campus energy.

Learn more on the IMEG podcast: